IUCN/SSC Otter Specialist Group Bulletin

©IUCN/SSC Otter Specialist Group

Volume 40 Issue 4 (October 2023)

Citation: Kistner, F., Slaney, L. and Morant, N. (2023). Can You Tell the Species by a Footprint? - Identifying Three of the Four Sympatric Southeast Asian Otter Species using Computer Vision and Deep Learning. IUCN Otter Spec. Group Bull. 40 (4): 197 - 210

Can You Tell the Species by a Footprint? - Identifying Three of the Four Sympatric Southeast Asian Otter Species using Computer Vision and Deep Learning

Frederick Kistner1,2*, Larissa Slaney2,3, and Nicolas Morant4,5

1Institute for Photogrammetry (IPF), Karlsruhe Institute of Technology (KIT), Germany

2WildTrack Specialist Group (WSG)

3Institute for Life and Earth Sciences, School of Energy, Geosciences, Infrastructure and Society, Heriot-Watt University, Edinburgh, UK

4Harvard University

5WildTrack

*Corresponding Author Postal Address: Photogrammetrie und Fernerkundung (IPF) Karlsruher Institut für Technologie (KIT), Deutschland; Email: frederick.kistner@kit.edu

Received 12th May 2023, accepted 21st July 2023

Abstract: Southeast Asia is home to four otter species, all with decreasing population trends. All four species can occur sympatrically and are listed on the International Union for Conservation of Nature’s (IUCN) Red List of Threatened Species. There are knowledge gaps regarding the distribution ranges and population sizes of these elusive species, information that is essential for implementing effective conservation measures. Footprints can be a cost-effective, non-invasive way to collect relevant data. WildTrack has developed a Footprint Identification Technology (FIT) classification model that uses landmark-based measurements as input data. This model is highly accurate at distinguishing between three of the four otter species in Southeast Asia. In this study, we propose a deep learning-based approach that automates the classification of species by analyzing the area within bounding boxes placed around footprints. The method significantly reduces the processing time and eliminates the need for highly skilled operators to place landmark points on footprints.

To train the model, 2,562 images with 3,895 annotated footprints were used, which resulted in an impressive accuracy, precision, and recall of 99% on both training and test sets. Furthermore, the model's performance was tested on a new set of 431 footprints, which were not used in the training process, and only 4 of them were incorrectly classified, demonstrating the effectiveness of the proposed approach on unseen data. The research findings of this study confirm the viability of using a machine learning model-based approach to accurately identify otter species through their footprints. This approach is both reliable and cost-effective, which makes it an attractive tool for otter monitoring and conservation efforts in Southeast Asia. Additionally, the method has significant potential for application in community-based citizen science monitoring programs. Further research could focus on expanding the scope of the study by adding footprints from hairy-nosed otters, as well as sympatric non-otter species, to the training database. Furthermore, this study suggests developing an object detection model and training new classification models that predict sex or re-identify individuals using a larger set of images of known (captive) individuals.

Keywords: Eurasian otter, Lutra lutra, Small-clawed otter, Aonyx cinereus, Smooth-coated otter, Lutrogale perspicillata, tracks, non-invasive animal monitoring

INTRODUCTION

Four species of otter share the same general geographic range in Southeast Asia: the Asian small-clawed otter (Aonyx cinereus), the Eurasian otter (Lutra lutra), the Hairy-nosed otter (Lutra sumatrana), and the Smooth-coated otter (Lutrogale perspicillata). All four are listed on the IUCN’s Red List of Threatened Species and are suffering from declining population trends (Duplaix and Savage, 2018).

Otters are an important flagship species for conservation (Stevens et al., 2011) and indicator species for water and wetland health (Bhandari and GC, 2008a). Some even describe them as keystone species (Basnet et al., 2020; Bhandari and GC, 2008b). In Southeast Asia, otters face numerous threats to their survival, including habitat loss and degradation (de Silva, 2011; Foster-Turley, 1992), hunting, poaching, illegal wildlife trade (Feeroz, 2015; Soe, 2022), and climate change (Cianfrani et al., 2018), as well as pollution and human-otter conflicts (Gomez and Bouhuys, 2018; Yoxon and Yoxon, 2017). There are knowledge gaps regarding the distribution ranges and population sizes of these elusive species in Southeast Asia, information that is essential for implementing effective conservation measures (Duplaix and Savage, 2018).

Effective conservation efforts require knowledge of a species' distribution and abundance to understand its ecological needs and develop targeted conservation strategies. This is particularly important in areas where little or no data is available and conservation needs are therefore difficult to judge (Duplaix and Savage, 2018). Under Objective 4 of the Global Otter Conservation Strategy by the IUCN’s Otter Specialist Group, noninvasive survey methods, such as camera-trapping, spraint analysis and environmental DNA (eDNA) analysis, are recommended (Duplaix and Savage, 2018). In addition, a novel, non-invasive and cost-effective PCR-RFLP eDNA method has recently been developed for otters, which relies on finding otter spraints for analysis (Sharma et al., 2022).

Despite the benefits of noninvasive survey methods, challenges remain in identifying otter species. Tracks can be a valuable and cost-effective source of noninvasive data and have the benefit that they can be collected by local communities and citizen scientists, which can enhance public awareness and engagement in conservation (Danielsen et al., 2005). They can however be difficult to find and interpret, particularly in dense or rugged environments. Even experienced field observers can misidentify tracks, with a misclassification rate of 44% reported for North American river otters (Evans et al., 2009). However, digital images of tracks can be analyzed later by an expert and, when taken in a standardized way with a scale, can be used to extract morphometric measurements for classification purposes.

WildTrack is a prominent research group in footprint identification and has published extensively on footprint analysis for many endangered and elusive species using their highly accurate Footprint Identification Technology (FIT). Several studies have demonstrated the effectiveness of morphometric analysis using digital images of tracks for species identification (S. K. Alibhai et al., 2008; De Angelo et al., 2010; Kistner et al., 2022), sex determination (S. Alibhai et al., 2017; Gu et al., 2014; Li et al., 2018), individual identification (S. Alibhai et al., 2017; S. K. Alibhai et al., 2008, 2023; Jewell et al., 2016; Li et al., 2018), and population size estimation (S. K. Alibhai et al., 2023; Jewell et al., 2020).

In a previous study, we used morphometric measurements derived from footprints of Lutra lutra, Lutrogale perspicillata, and Aonyx cinereus to build an XGBoost classifier and achieved an overall species classification accuracy of 91% on new unseen test data (Kistner et al., 2022).

However, even though these morphometric models perform well with a small amount of data, they have limitations. These include the need for an expert to place the landmark points used for morphometric extraction and the inability of the model to account for variations within a species that are not represented within the previously defined measurements. To address these limitations, computer vision techniques such as convolutional neural networks (CNNs) have been utilized successfully in various wildlife conservation efforts, including age prediction of pandas (Zang et al., 2022), species identification in camera trap images (Carl et al., 2020; Wägele et al., 2022; Willi et al., 2019) and individual animal recognition (Chen et al., 2020; Hansen et al., 2018). These techniques offer advantages in speed, automation, and cost-effectiveness for species identification in conservation applications (Wäldchen and Mäder, 2018).

This study builds on previous research (Kistner et al., 2022) and investigates the possibility of distinguishing between three otter species by their footprints with a deep learning approach. Here we aim to address the limitations of previous morphometric approaches and explore the use of CNNs for the identification of otter species in Southeast Asia using digital images of tracks. As far as we know, this is the first study to use a method that combines traditional track identification with computer vision and machine learning to classify otter tracks with high accuracy, allowing for intra-species variation. We demonstrate the potential of this technique as a valuable tool for otter conservation efforts in the region by achieving accurate species identification.

METHODS

Ethics Statement

The majority of data collection for this study was carried out in zoos and otter sanctuaries by either professional wild animal keepers or under their supervision.

Keepers followed their normal working practices and risk assessments. Data collection involved the non-invasive collection of digital otter footprint images found on substrates in the animals’ enclosures. All animals walked across these natural substrates of their own free will. This non-invasive approach avoided any direct contact with the animals to minimize any potential risks associated with handling them. This also ensured the animals left the footprints whilst displaying their natural behavior. Footprint data found in the field was collected after the wild otters had left the area, thus avoiding any stress on the wild animals and keeping both researchers and animals safe. Therefore, our data collection method was ethical and non-invasive, aligning with the principles of animal welfare.

Data Collection

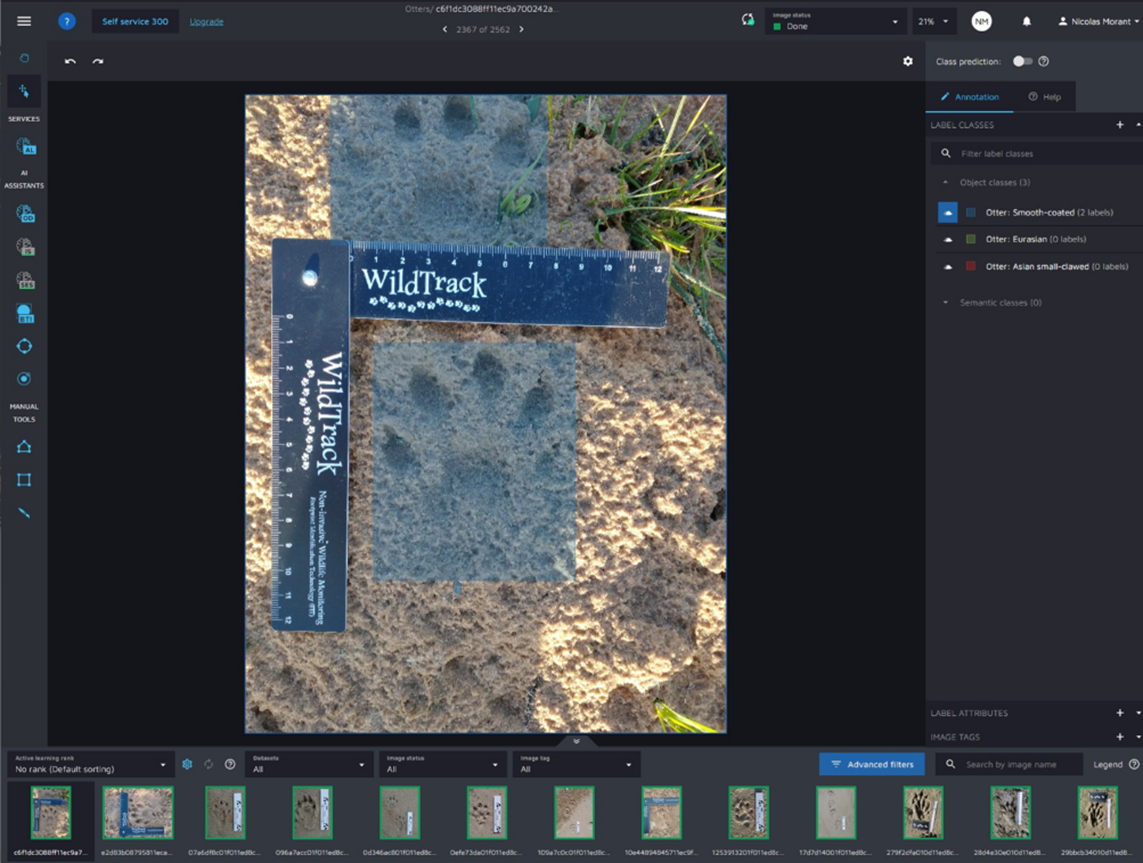

In this study, we only used images with a known species identity. Therefore, we collected images from captive otters and added field prints from Eurasian otters in Portugal where only one species of the three is present. Smooth-coated otter prints were collected in-situ after visual identification of the species. In total, we received a dataset of 2,562 images from over 20 participants, each contributing one or more otter footprints. The data collection involved 17 zoos and otter sanctuaries in Germany, the UK, Austria and France. Figure 1 shows data collection of otter footprint images following the FIT protocol at a UK zoo. To ensure the quality of the images, we rated them on a scale of 1 (poor) to 5 (excellent). Most of the images were collected following the FIT protocol for collecting otter footprints (Kistner et al., 2022) and included a metric ruler for scale, but images with multiple footprints taken from various heights, some of which did not feature a scale, were also included.

Data Annotation

To annotate images, we used Hasty, a CloudFactory company, which helped us streamline the process of labeling images by creating an accurate ground truth of our data at a much faster rate. The platform's user-friendly interface and built-in tools made it easy for our team to preprocess and label the data efficiently, thereby reducing the amount of time and resources required for this crucial step in training our models. Specifically, we annotated 3,895 footprints with known species identities by placing bounding boxes around them. This included 1,138 Aonyx cinereus, 479 Lutrogale perspicillata, and 2,278 Lutra lutra. We annotated all four feet in the images as part of the annotation process. Figure 2 shows a screenshot illustrating data annotation in Hasty.

Model Training

Additionally, Hasty’s support for a wide range of algorithms and frameworks, as well as its ability to train models on cloud-based resources, allowed us to experiment with different approaches and find the best solution for our needs. Furthermore, the platform's tools for monitoring and analyzing model performance allowed us to track progress and identify areas for improvement. This helped us to optimize model training and ultimately improve the accuracy of our models.

We developed an image label classification model using the labeled bounding boxes containing the otters’ footprints with the objective of identifying the specific otter species. Because only the image area inside each bounding box was provided, the model could focus on this region of interest, making the classification more accurate. This technique is especially useful because many of our images contain multiple footprints.
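The cropping step described above can be illustrated with a minimal sketch. It is not the Hasty implementation: the image is represented as a plain nested list, and the names `crop_box` and `crops_for_image`, the box convention `(x_min, y_min, x_max, y_max)` and the toy data are all assumptions made for illustration.

```python
from typing import List, Tuple

Image = List[List[int]]           # grayscale image as rows of pixel values
Box = Tuple[int, int, int, int]   # (x_min, y_min, x_max, y_max), max values exclusive


def crop_box(image: Image, box: Box) -> Image:
    """Return the pixels inside `box`, clamped to the image bounds."""
    height = len(image)
    width = len(image[0]) if height else 0
    x_min, y_min, x_max, y_max = box
    x_min, y_min = max(0, x_min), max(0, y_min)
    x_max, y_max = min(width, x_max), min(height, y_max)
    return [row[x_min:x_max] for row in image[y_min:y_max]]


def crops_for_image(image: Image, annotations) -> list:
    """Build one (crop, species) training sample per annotated footprint."""
    return [(crop_box(image, box), species) for box, species in annotations]


# Toy 6 x 8 image whose pixel value encodes its position (10 * row + column).
image = [[10 * r + c for c in range(8)] for r in range(6)]
# Hypothetical annotations: two footprints in the same image.
annotations = [((1, 1, 4, 3), "Lutra lutra"), ((5, 2, 10, 6), "Aonyx cinereus")]
samples = crops_for_image(image, annotations)
```

Each annotated footprint becomes its own sample, so an image with several footprints contributes several crops to the classifier. The second box deliberately extends past the image edge to show the clamping.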

To train our models, we divided the data into three sets: a training set (80%), a validation set (10%), and a test set (10%). To ensure that our data was split in a representative manner, we employed stratification, so that each set contained approximately the same proportions of the target classes as the full dataset. The training set was used to train the different models, while the validation set was used to evaluate performance and optimize parameters. Finally, the test set was used to assess the overall performance of the models on unseen data.
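A stratified 80/10/10 split can be sketched as follows. This is a minimal illustration, not the procedure used by the annotation platform: the function name `stratified_split`, the random seed and the decision to split at the level of individual footprints are assumptions, so the resulting set sizes need not match those reported in this study. The class counts are those given in the Data Annotation section.

```python
import random
from collections import defaultdict


def stratified_split(labels, fractions=(0.8, 0.1, 0.1), seed=0):
    """Split sample indices into train/val/test, preserving class proportions."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    train, val, test = [], [], []
    for indices in by_class.values():
        rng.shuffle(indices)                      # shuffle within each class
        n_train = round(fractions[0] * len(indices))
        n_val = round(fractions[1] * len(indices))
        train += indices[:n_train]
        val += indices[n_train:n_train + n_val]
        test += indices[n_train + n_val:]         # remainder goes to the test set
    return train, val, test


# Annotated footprints per species, as reported in the Data Annotation section.
counts = {"Aonyx cinereus": 1138, "Lutrogale perspicillata": 479, "Lutra lutra": 2278}
labels = [species for species, n in counts.items() for _ in range(n)]
train, val, test = stratified_split(labels)
```

Splitting within each class first, rather than shuffling the whole dataset once, keeps the least frequent species (here Lutrogale perspicillata) represented in all three sets.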

We selected the ResNet-18 CNN architecture (He et al., 2016) due to its good trade-off between accuracy, recall, and training duration. This architecture is suitable for small datasets like ours since it has fewer parameters than other CNN architectures such as ResNet-34, ResNet-50, or larger models, which require more resources and longer training times, ultimately resulting in higher costs for model training. We used the AdamW optimizer (Loshchilov and Hutter, 2017), which uses the Adam algorithm with decoupled weight decay regularization to help prevent overfitting. Our only data transformation step was resizing the input images to 512 × 512 pixels. It is beyond the scope of this paper to go into further detail on CNN architectures and the computational requirements of model training.
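To make the role of decoupled weight decay concrete, the following sketch implements a single AdamW update for one scalar parameter, following the general form given by Loshchilov and Hutter (2017). It is an illustration only: the function name `adamw_step` is hypothetical, and the values for `beta1`, `beta2`, `eps` and `weight_decay` are commonly used defaults assumed here, since the settings used in training are not reported beyond the base learning rate.

```python
import math


def adamw_step(theta, grad, m, v, t, lr=1e-4, beta1=0.9, beta2=0.999,
               eps=1e-8, weight_decay=0.01):
    """One AdamW update for a single scalar parameter.

    The weight-decay term acts directly on the parameter (decoupled),
    instead of being added to the gradient as in L2-regularized Adam.
    """
    m = beta1 * m + (1 - beta1) * grad            # first-moment estimate
    v = beta2 * v + (1 - beta2) * grad * grad     # second-moment estimate
    m_hat = m / (1 - beta1 ** t)                  # bias correction for step t
    v_hat = v / (1 - beta2 ** t)
    theta = theta - lr * (m_hat / (math.sqrt(v_hat) + eps) + weight_decay * theta)
    return theta, m, v


# With a zero gradient only the decay acts: theta shrinks by lr * weight_decay * theta.
decayed, _, _ = adamw_step(theta=1.0, grad=0.0, m=0.0, v=0.0, t=1)
# With a non-zero gradient the first bias-corrected step has a size close to lr.
stepped, m1, v1 = adamw_step(theta=1.0, grad=2.0, m=0.0, v=0.0, t=1)
```

The first call shows why the decay is called decoupled: the parameter is pulled towards zero even when the loss gradient vanishes, independently of the adaptive gradient scaling.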

In addition, to improve the overall performance of our models, we tuned various hyperparameters. Firstly, we varied the dataset by selecting images with different quality ratings for training. Secondly, we adjusted the base learning rate, which controls how quickly the model's parameters are updated. Additionally, we used a scheduler to adjust the learning rate over the course of training to improve efficiency. Lastly, we adjusted the batch size to fine-tune the model, as shown in Table 1. Together, these adjustments allowed us to produce accurate multi-class classification models.

RESULTS

Model Selection

The results of the different models are presented in Table 1, with the “Accuracy Train” column displaying the accuracy on the training data, the “Accuracy Val” column showing the accuracy on the validation data, the “Avg Loss Train” column displaying the average loss on the training data, and the “Avg Loss Val” column showing the average loss on the validation data. Based on their performance on the validation set, we selected the best model trained on images rated 5, 4, and 3 and the best model trained on all images. These models were then further evaluated on the holdout test set.

The model that performed the best for images with ratings 5, 4, and 3 had a base learning rate of 0.0001, employed the ReduceLROnPlateau scheduler, had a train batch size of 64, and a test batch size of 24. This model displayed exceptional results, achieving an accuracy of 100% on the training set, 99.66% on the validation set, and a negligible average loss of 0.00 on the training set and 0.01 on the validation set.

The best model for all images had a base learning rate of 0.0001, used the ReduceLROnPlateau scheduler, had a train batch size of 64, and a test batch size of 24. This model had a mAP accuracy of 99.44% on the training set, 99.07% on the validation set, and an average loss of 0.00 on the training data and 0.02 on the validation data. All further hyperparameters are listed in Table 1.
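The idea behind a reduce-on-plateau scheduler, as used by both selected models, can be sketched in a few lines. This is a simplified illustration of the rule, not the library implementation: the class name `PlateauScheduler`, the `factor`, `patience` and `min_lr` values and the validation losses are all made up for the example. Only the starting learning rate of 0.0001 is taken from the text.

```python
class PlateauScheduler:
    """Simplified reduce-on-plateau rule for a validation loss (lower is better)."""

    def __init__(self, lr, factor=0.1, patience=2, min_lr=1e-7):
        self.lr = lr
        self.factor = factor        # multiplier applied when the loss stalls
        self.patience = patience    # epochs without improvement that are tolerated
        self.min_lr = min_lr
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        """Record one epoch's validation loss and return the learning rate to use next."""
        if val_loss < self.best:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        if self.bad_epochs > self.patience:
            self.lr = max(self.lr * self.factor, self.min_lr)
            self.bad_epochs = 0
        return self.lr


scheduler = PlateauScheduler(lr=1e-4)
val_losses = [0.30, 0.12, 0.05, 0.05, 0.06, 0.05, 0.04]  # made-up values
history = [scheduler.step(loss) for loss in val_losses]
```

In this toy run the learning rate stays at its base value while the validation loss keeps improving and is reduced tenfold only after three consecutive epochs without a new best value.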

The model that performed the best for all images had comparable results to the model using only high-quality images. Therefore, we only present the performance of the model using all images on the test set, as it includes a broader range of images and is likely to have a greater level of generalizability.

Model Evaluation Test Set

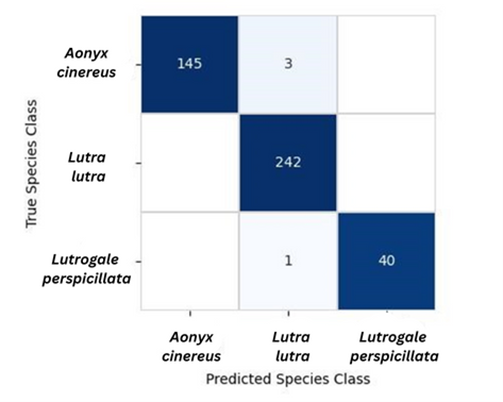

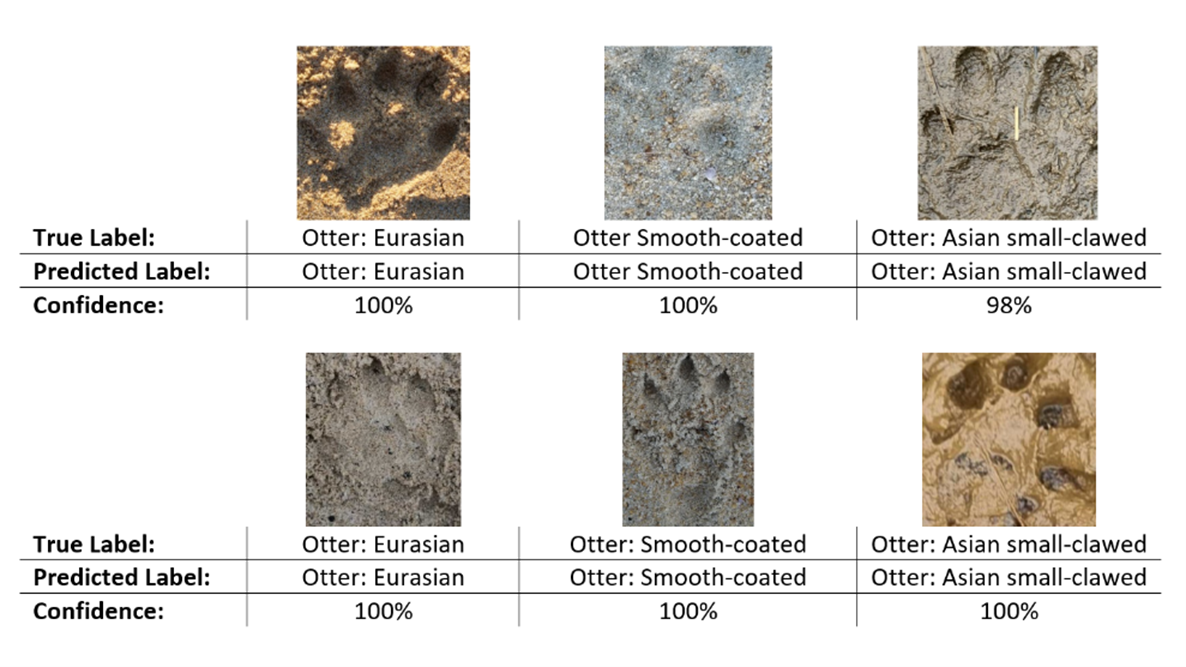

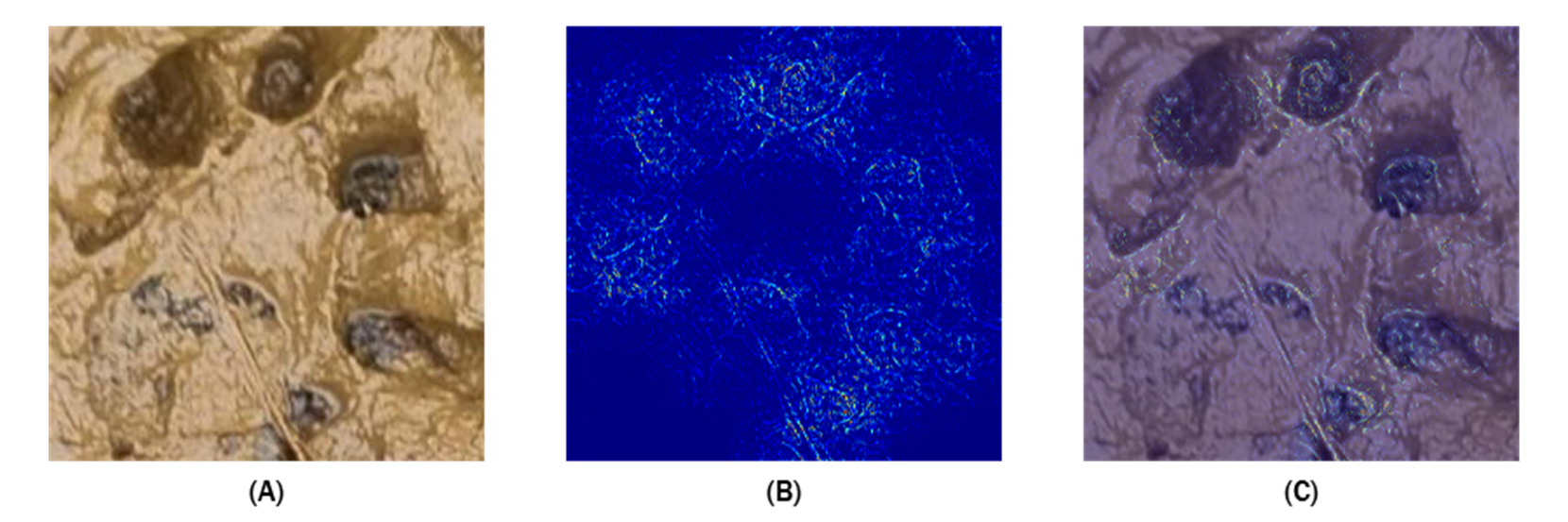

The performance of the model on the test set, which consists of previously unseen data, is displayed in this confusion matrix (Figure 3). It illustrates the number of accurate and inaccurate predictions made by the model for each species. For instance, the model correctly identified 40 instances of the Otter: Smooth-coated species, and it made one misclassification of this species. Additionally, it correctly identified 242 instances of the Otter: Eurasian species and had no incorrect predictions of this species. Likewise, it correctly identified 145 instances of the Otter: Asian small-clawed species but made 3 erroneous predictions of this species. Examples of these predictions are shown in Figure 4. Figure 5 is a saliency map, illustrating an exemplary visual representation of regions of importance for the classification model within this particular footprint.
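The counts quoted above can be turned into per-species and overall rates with a short calculation. The sketch assumes that each reported misclassification refers to a footprint that truly belongs to the named species and was assigned to another one; the text does not state the direction of the errors explicitly, so the per-species values should be read with that caveat.

```python
# Counts as described for the confusion matrix; "wrong" is assumed to mean
# footprints of that species that were assigned to a different species.
test_counts = {
    "Smooth-coated": {"correct": 40, "wrong": 1},
    "Eurasian": {"correct": 242, "wrong": 0},
    "Asian small-clawed": {"correct": 145, "wrong": 3},
}

# Share of each species' footprints that was classified correctly.
per_species_recall = {
    species: c["correct"] / (c["correct"] + c["wrong"])
    for species, c in test_counts.items()
}

total_correct = sum(c["correct"] for c in test_counts.values())
total = sum(c["correct"] + c["wrong"] for c in test_counts.values())
accuracy = total_correct / total

for species, recall in per_species_recall.items():
    print(f"{species}: {recall:.3f}")
print(f"overall accuracy: {accuracy:.4f} ({total_correct}/{total})")
```

The totals reproduce the 431 test footprints and 4 errors stated in the abstract.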

In conclusion, the model’s performance on the test set is strong, with a high number of correct predictions for each species, although the small number of incorrect predictions shows that there is still room for improvement.

Table 2 displays the performance of the top model on the test set, which was trained using all images. The first column displays the number of times the model correctly identified each species (True Positive count, TP). The second column displays the number of times the model incorrectly identified each species (False Positive count, FP). The third column displays the number of times the model failed to identify each species (False Negative count, FN). The fourth column shows the precision, which is the percentage of correctly identified instances among all instances that were identified as that species. The fifth column shows the recall, which is the percentage of correctly identified instances among all instances that actually belong to that species. The sixth column shows the micro-averaged F1 score, which is computed from the counts pooled across all species and represents the overall performance of the model. The seventh column shows the macro-averaged F1 score, which is the unweighted mean of the per-species F1 scores. The last column shows the weighted F1 score, which weights each species' F1 score by its number of test samples. The model performed well overall, with all the above metrics achieving a score of 0.99.

Table 2: Best Model (All Images) Classification Performance Table on Unseen Data

| | TP | FP | FN | Precision | Recall | Micro F1 | Macro F1 | Weighted F1 |
|---|---|---|---|---|---|---|---|---|
| Model | 427 | 4 | 4 | 0.99 | 0.99 | 0.99 | 0.99 | 0.99 |
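The pooled values in Table 2 follow directly from the three counts, reading the second count column as false positives. The sketch below uses the standard definitions of precision, recall and F1; the function name `precision_recall_f1` is only illustrative.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, recall and F1 from pooled true/false positive and false negative counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Pooled counts from Table 2.
precision, recall, f1 = precision_recall_f1(tp=427, fp=4, fn=4)
print(f"precision={precision:.2f} recall={recall:.2f} micro-F1={f1:.2f}")
```

Because every footprint receives exactly one predicted species, each error counts once as a false positive for the predicted species and once as a false negative for the true species. The pooled FP and FN counts are therefore equal, and micro-averaged precision, recall and F1 all coincide with the overall accuracy of 427/431.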

Table 3 shows the performance of our top classification model, which was trained on all images, in identifying three of the four Southeast Asian otter species. It reveals that the model has a high level of precision and recall for each species. The F1-Score, a measure of the balance between precision and recall, is also high, which means that the model is performing well in both identifying the species and minimizing false identifications. Overall, the table indicates that the model is effectively distinguishing between the three different types of otter species.

DISCUSSION

The recent Malaysia Otter Workshop 2022, jointly organized by the Malaysia Otter Network, Malaysian Nature Society and the International Otter Survival Fund, highlighted the need for conducting otter surveys across Southeast Asia in light of the pressing issues of habitat degradation, pollution, human-otter conflict and the illegal trade of otter pets and fur (Yoxon, 2022). Given the scarcity of precise information on otter species distribution, it is crucial to carry out official and accurate surveys.

Our study demonstrates that using this non-invasive, low-cost and effective method of standardized, machine-learning-based footprint analysis provides an accurate survey method. Furthermore, to enhance the quality and quantity of available data, engaging local communities, especially those with Traditional Ecological Knowledge (TEK), could be a valuable approach. By incorporating the insights of these communities, a more comprehensive understanding of the current status of otters in the region could be attained.

Accurate baseline data is crucial for conservation efforts, but identifying elusive species like otters can be challenging. To tackle this challenge, we have developed a novel approach that uses CNN-based computer vision, specifically a ResNet model, to identify otter species from their footprints. Our approach outperformed our previous study (Kistner et al., 2022) in terms of performance scores on a larger dataset and reduced the time required for data labeling. Our study found that it is possible to distinguish between three sympatric otter species by analyzing their footprints using a partially automated computer vision approach. Furthermore, we believe the use of bounding boxes instead of morphometric landmark points has potentially reduced operator bias, thereby enhancing the reliability of our findings. We are optimistic that this technique can pave the way for more effective monitoring and conservation of otters and other elusive species, while linking with TEK across communities globally, and linking in-situ and ex-situ species conservation and research.

However, it should be noted that our current model is limited to predicting the three otter species it was trained on, so a track from a non-otter species would still be assigned to one of these three classes. To overcome this limitation, future research could focus on training the model on a comprehensive regional database that includes tracks and signs of all species present in that specific region. Alternatively, a model could be developed to identify new classes once the data discrepancy reaches a certain threshold. Additionally, our approach is not entirely automated, as bounding boxes need to be manually set. Future research could enhance the methodology by incorporating object detection, footprint quality, and scale estimation models built into an entire machine learning pipeline, enabling a fully automated method. The integration of such an approach into a smartphone-based application with inference could potentially enhance user experience.
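One simple way to approximate the threshold idea mentioned above is to reject predictions whose highest class probability is low. The sketch below is a baseline we substitute for illustration, not a method evaluated in this study: the function names, the threshold of 0.9 and the example scores are assumptions, and softmax confidence is known to be an imperfect indicator for inputs unlike the training data.

```python
import math

SPECIES = ["Aonyx cinereus", "Lutra lutra", "Lutrogale perspicillata"]


def softmax(logits):
    """Convert raw model scores into probabilities that sum to 1."""
    shift = max(logits)                          # subtract the max for numerical stability
    exps = [math.exp(x - shift) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_with_rejection(logits, threshold=0.9):
    """Return (label, confidence); the label is 'unknown' below the threshold."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] < threshold:
        return "unknown", probs[best]
    return SPECIES[best], probs[best]


# Hypothetical model outputs for two footprints.
confident = predict_with_rejection([0.2, 6.0, 0.5])
uncertain = predict_with_rejection([1.1, 1.3, 1.0])
```

Footprints flagged as "unknown" could then be passed to an expert for review instead of being forced into one of the three otter classes.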

On the technical side, future research could explore the minimum quality criteria for footprints necessary for successful application of our approach. Additionally, our technique could be extended to benefit other species beyond otters, and data collection could involve not only professional trackers and zoos, but also local/indigenous people and citizen scientists. The participation of indigenous people and locals in conservation efforts is essential as they are key stakeholders in these areas and their involvement could potentially lead to job creation and the incorporation of Traditional Ecological Knowledge (TEK) into conservation initiatives (Danielsen et al., 2014; Ponce-Martins et al., 2022). This would not only foster greater community engagement but also promote the preservation of local ecosystems and their biodiversity.

From an ecological perspective, our approach could be used to look for new target classes, such as sex, age class, and individual identification, as demonstrated previously for other species with morphometrics (S. Alibhai et al., 2017; S. K. Alibhai et al., 2023; Jewell et al., 2016, 2020; Li et al., 2018). For instance, we could integrate hairy-nosed otters for the Southeast Asian region, create a method for individual identification, and develop an approach for other otter species. Footprint analysis could also aid in mitigating human-otter conflict by identifying the specific otter species or even the individual otter that is part of the conflict situation, such as conflict with commercial and subsistence fish farming (Duplaix and Savage, 2018; Shrestha et al., 2022). This would not only facilitate better management of human-otter interactions but also enhance our understanding of otter behavior and ecology.

CONCLUSION

In conclusion, our approach has significant potential to improve our understanding of otter species distribution and behavior, thus providing valuable insights for conservation efforts aimed at safeguarding these charismatic and ecologically important species. By enabling more accurate and efficient identification of otter species, our approach could potentially facilitate more targeted conservation strategies, such as habitat protection and restoration, and the identification and mitigation of threats like pollution and poaching. Ultimately, this could help to preserve these animals’ populations and the ecosystems they inhabit for future generations.

Currently, public use of the model is not possible due to concerns about protecting endangered species from potential misuse by poachers. However, we are working on developing a secure platform where verified biologists can register, submit images for inference, and receive results within seconds. In the future, this functionality will also be available through a mobile app, even in areas without internet access. This development is still in progress and will take time before it becomes available to users.

Acknowledgements: We would like to express our deep gratitude to Prof. Dr. Ing. Stephan Hinz for his invaluable guidance and support throughout the course of this study. Furthermore, we extend our sincere thanks to Deutsche Bundesstiftung Umwelt (DBU) and Karlsruhe House of Young Scientists (KHYS) for their generous financial support, which made this research possible. We express our utmost gratitude to Zoe Jewell and Sky Alibhai from WildTrack for their invaluable support and the remarkable WildTrack AI footprint database they have initiated.

We extend our heartfelt appreciation to the zoos that have generously contributed to our research. We would like to express our gratitude to Colchester Zoo, Flamingo Land Resort, Lake District Wildlife Park, New Forest Wildlife Park, Woburn Safari Park, and Yorkshire Wildlife Park in the UK; Otter-Zentrum Hankensbüttel, Tiergarten Nürnberg, Tiergarten Straubing, Wiesbaden, Wildpark Pforzheim, Zoologischer Stadtgarten Karlsruhe, and Zoo-Landau in Germany; Alpenzoo Innsbruck and Salzburg Zoo in Austria; and NaturOparC in France. They have either facilitated our image collection on-site or directly uploaded images to our WildTrack database. Their valuable contributions have been instrumental in our research efforts.

We would like to extend a special thanks to the International Otter Survival Fund (IOSF) for their continued support and advice. Further thanks also to Amy Fitzmaurice and Huayu Tsu for their invaluable advice when reviewing the draft article.

Finally, we would like to acknowledge the contributions of Hasty, a CloudFactory company, which provided us with the necessary data labeling infrastructure and model training computational capacity, without which this would not have been possible.

REFERENCES

Alibhai, S., Jewell, Z., and Evans, J. (2017). The challenge of monitoring elusive large carnivores: An accurate and cost-effective tool to identify and sex pumas (Puma concolor) from footprints. PLoS ONE, 12(3). https://doi.org/10.1371/journal.pone.0172065

Alibhai, S. K., Gu, J., Jewell, Z. C., Morgan, J., Liu, D., and Jiang, G. (2023). ‘I know the tiger by his paw’: A non-invasive footprint identification technique for monitoring individual Amur tigers (Panthera tigris altaica) in snow. Ecological Informatics, 73 (December 2022): 101947. https://doi.org/10.1016/j.ecoinf.2022.101947

Alibhai, S. K., Jewell, Z. C., and Law, P. R. (2008). A footprint technique to identify white rhino Ceratotherium simum at individual and species levels. Endangered Species Research, 4(1–2): 205–218. https://doi.org/10.3354/esr00067

Basnet, A., Prashant, G., Timilsina, Y., and Bist, B. (2020). Otter research in Asia: Trends, biases and future directions. Global Ecology and Conservation, 24. https://doi.org/10.1016/j.gecco.2020.e01391

Bhandari, J., and GC, D. B. (2008a). Preliminary Survey and Awareness for Otter Conservation in Rupa Lake, Pokhara, Nepal. Journal of Wetlands Ecology, 2. https://doi.org/10.3126/jowe.v1i1.1570

Bhandari, J., and GC, D. B. (2008b). Preliminary survey and awareness for otter conservation in Rupa Lake, Pokhara, Nepal. Journal of Wetlands Ecology, 2. https://doi.org/10.3126/jowe.v1i1.1570

Carl, C., Schönfeld, F., Profft, I., Klamm, A., and Landgraf, D. (2020). Automated detection of European wild mammal species in camera trap images with an existing and pre-trained computer vision model. European Journal of Wildlife Research, 66(4): 62. https://doi.org/10.1007/s10344-020-01404-y

Chen, P., Swarup, P., Matkowski, W. M., Kong, A. W. K., Han, S., Zhang, Z., and Rong, H. (2020). A study on giant panda recognition based on images of a large proportion of captive pandas. Ecology and Evolution, 10(7): 3561–3573. https://doi.org/10.1002/ece3.6152

Cianfrani, C., Broennimann, O., Loy, A., and Guisan, A. (2018). More than range exposure: Global otter vulnerability to climate change. Biological Conservation, 221: 103–113. https://doi.org/10.1016/j.biocon.2018.02.031

Danielsen, F., Jensen, P. M., Burgess, N. D., Altamirano, R., Alviola, P. A., Andrianandrasana, H., Brashares, J. S., Burton, A. C., Coronado, I., and Corpuz, N. (2014). A multicountry assessment of tropical resource monitoring by local communities. BioScience, 64(3): 236–251. https://doi.org/10.1093/biosci/biu001

De Angelo, C., Paviolo, A., and Di Bitetti, M. S. (2010). Traditional versus multivariate methods for identifying jaguar, puma, and large canid tracks. The Journal of Wildlife Management, 74(5): 1141–1151. https://doi.org/10.2193/2009-293

De Silva, P. K. (2011). Status of Otter Species in the Asian Region Status for 2007: Proceedings of XIth International Otter Colloquium. IUCN Otter Specialist Group Bulletin, 28: 97–107. http://www.iucnosg.org/Bulletin/Volume28A/de_Silva_2011.html

Duplaix, N., and Savage, M. (2018). The global otter conservation strategy. In IUCN/SSC Otter Specialist Group, Salem. eScholarship, University of California. https://escholarship.org/uc/item/12d608qf

Evans, J. W., Evans, C. A., Packard, J. M., Calkins, G., and Elbroch, M. (2009). Determining Observer Reliability in Counts of River Otter Tracks. Journal of Wildlife Management, 73(3): 426–432. https://doi.org/10.2193/2007-514

Feeroz, M. M. (2015). Lutrogale perspicillata. IUCN Bangladesh. Red List of Bangladesh Volume 2: Mammals. IUCN, International Union for Conservation of Nature, Bangladesh Country Office, Dhaka, Bangladesh. p. 76. https://docslib.org/doc/418767/red-list-of-bangladesh-volume-2-mammals

Foster-Turley, P. (1992). Conservation Aspects of the Ecology of Asian Small- Clawed and Smooth Otters on the Malay Peninsula. IUCN Otter Specialist Group Bulletin, 7: 26–29. http://ww.otterspecialistgroup.org/Bulletin/Volume7/Foster_Turley_1992.pdf

Gomez, L., and Bouhuys, J. (2018). Illegal otter trade in Southeast Asia. TRAFFIC, Petaling Jaya, Selangor, Malaysia. https://www.traffic.org/publications/reports/illegal-otter-trade-in-southeast-asia/

Gu, J., Alibhai, S. K., Jewell, Z. C., Jiang, G., and Ma, J. (2014). Sex determination of Amur tigers (Panthera tigris altaica) from footprints in snow. Wildlife Society Bulletin, 38(3): 495–502. https://doi.org/10.1002/wsb.432

Hansen, M. F., Smith, M. L., Smith, L. N., Salter, M. G., Baxter, E. M., Farish, M., and Grieve, B. (2018). Towards on-farm pig face recognition using convolutional neural networks. Computers in Industry, 98: 145–152. https://doi.org/10.1016/j.compind.2018.02.016

He, K., Zhang, X., Ren, S., and Sun, J. (2016). Deep residual learning for image recognition. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 770–778. https://doi.org/10.1109/CVPR.2016.90

Jewell, Z. C., Alibhai, S. K., Weise, F., Munro, S., Van Vuuren, M., and Van Vuuren, R. (2016). Spotting Cheetahs: Identifying Individuals by Their Footprints. Journal of Visualized Experiments, 111, 1–11. https://doi.org/10.3791/54034

Jewell, Z. C., Alibhai, S., Law, P. R., Uiseb, K., and Lee, S. (2020). Monitoring rhinoceroses in Namibia’s private custodianship properties. PeerJ, 8, e9670. https://doi.org/10.7717/peerj.9670

Kistner, F., Slaney, L., Jewell, Z., Ben-david, A., and Alibhai, S. (2022). It’s Otterly Confusing-Distinguishing Between Footprints of Three of the Four Sympatric Asian Otter Species Using Morphometrics and Machine Learning. Otter (Journal of the International Otter Survival Fund), 8: 108–124. https://www.wildtrack.org/assets/documents/IOSF-JOURNAL-2022-Kistner-et-al-_108-125-1.pdf

Li, B. V., Alibhai, S., Jewell, Z., Li, D., and Zhang, H. (2018). Using footprints to identify and sex giant pandas. Biological Conservation, 218: 83–90. https://doi.org/10.1016/j.biocon.2017.11.029

Loshchilov, I., and Hutter, F. (2017). Decoupled weight decay regularization. ArXiv Preprint ArXiv:1711.05101. https://doi.org/10.48550/arXiv.1711.05101

Ponce-Martins, M., Lopes, C. K. M., de Carvalho-Jr, E. A. R., dos Reis Castro, F. M., de Paula, M. J., and Pezzuti, J. C. B. (2022). Assessing the contribution of local experts in monitoring Neotropical vertebrates with camera traps, linear transects and track and sign surveys in the Amazon. Perspectives in Ecology and Conservation, 20(4): 303-313. https://doi.org/10.1016/j.pecon.2022.08.007

Sharma, S., Chee-, W., Kannan, A., Rama, S., Patah, P. A.-, and Ratnayeke, S. (2022). Identification of three Asian otter species (Aonyx cinereus, Lutra sumatrana, and Lutrogale perspicillata) using a novel noninvasive PCR-RFLP analysis. Ecology and Evolution, 12, e9585. https://doi.org/10.1002/ece3.9585

Shrestha, M.B., Shrestha, G., Reule, S., Oli, S. and Ghartimagar, T. B. (2022). Presence of Evidence and Factors Affecting Distribution of Eurasian Otter (Lutra lutra) in the Pelma River, Rukum East, Nepal. Otter (Journal of the International Otter Survival Fund), 8: 70–83. https://otter.org/documents/journals/IOSF_Journal_Vol8_2022.pdf

Soe, M. M. (2022). Otter Records in Myanmar. Otter (Journal of the International Otter Survival Fund), 8: 43–50. https://otter.org/documents/journals/IOSF_Journal_Vol8_2022.pdf

Stevens, S., Organ, J. F., and Serfass, T. L. (2011). Otters as Flagships: Social and Cultural Considerations. Proceedings of Xth International Otter Colloquium, IUCN Otter Spec. Group Bull. 28A: 150–161. https://www.iucnosgbull.org/Volume28A/Stevens_et_al_2011.html

Wägele, J. W., Bodesheim, P., Bourlat, S. J., Denzler, J., Diepenbroek, M., Fonseca, V., Frommolt, K. H., Geiger, M. F., Gemeinholzer, B., Glöckner, F. O., Haucke, T., Kirse, A., Kölpin, A., Kostadinov, I., Kühl, H. S., Kurth, F., Lasseck, M., Liedke, S., Losch, F., Wildermann, S. (2022). Towards a multisensor station for automated biodiversity monitoring. Basic and Applied Ecology, 59: 105–138. https://doi.org/10.1016/j.baae.2022.01.003

Wäldchen, J., and Mäder, P. (2018). Machine learning for image based species identification. Methods in Ecology and Evolution, 9: 2216–2225. https://doi.org/10.1111/2041-210X.13075

Willi, M., Pitman, R. T., Cardoso, A. W., Locke, C., Swanson, A., Boyer, A., Veldthuis, M., and Fortson, L. (2019). Identifying animal species in camera trap images using deep learning and citizen science. Methods in Ecology and Evolution, 10(1): 80–91. https://doi.org/10.1111/2041-210X.13099

Yoxon, P. (2022). Conservation of Endangered Otters and their habitats in Malaysia through education and a Training Workshop. Otter (Journal of the International Otter Survival Fund), 8: 14–25. https://otter.org/documents/journals/IOSF_Journal_Vol8_2022.pdf

Yoxon, P., and Yoxon, G. M. (2017). Otters of the world. Whittles Publishing. ISBN-13 978-1849951296

Zang, H.-X., Su, H., Qi, Y., Feng, L., Hou, R., He, M., Liu, P., Xu, P., Yu, Y., and Chen, P. (2022). Ages of giant panda can be accurately predicted using facial images and machine learning. Ecological Informatics, 72: 101892. https://doi.org/10.1016/j.ecoinf.2022.101892

Résumé: Can You Tell the Species by a Footprint? - Identifying Three of the Four Sympatric Southeast Asian Otter Species using Computer Vision and Deep Learning

Southeast Asia is home to four otter species, all with decreasing population trends. All four of Southeast Asia’s otter species can coexist sympatrically and are on the International Union for Conservation of Nature’s (IUCN) Red List of Threatened Species. There are knowledge gaps in the distribution range and population sizes of these elusive species, which is essential information for the implementation of effective conservation measures. Footprints can be a cost-effective, non-invasive way to collect relevant data. WildTrack has developed a Footprint Identification Technology (FIT) classification model that uses landmark-based measurements as input data. This model is highly accurate at distinguishing between three of the four otter species in Southeast Asia. In this study, we propose a deep learning-based approach that automates the classification of species by analyzing the area within bounding boxes placed around footprints. The method considerably reduces data processing time and removes the need for highly skilled operators to place landmark points on footprints.

To train the model, 2,562 images containing 3,895 annotated footprints were used, resulting in an impressive 99% accuracy, precision, and recall on both the training and test sets. In addition, the model's performance was tested on a new set of 431 footprints that were not used in the training process, and only 4 of them were misidentified, demonstrating the effectiveness of the proposed approach on unseen data. The results of this study confirm the viability of a machine learning-based approach for accurately identifying otter species from their footprints. This approach is both reliable and cost-effective, making it an attractive tool for otter monitoring and conservation efforts in Southeast Asia. The method also has significant potential for application in community-based citizen science monitoring programs. Further research could broaden the scope of the study by adding footprints of the Sumatran otter, as well as of sympatric non-otter species, to the training database. In addition, this study suggests developing an object detection model and training new classification models that predict sex or re-identify individuals using a larger number of images of known (captive) individuals.


Resumen: Can You Tell the Species by a Footprint? - Identifying Three of the Four Sympatric Southeast Asian Otter Species using Computer Vision and Deep Learning

Southeast Asia is home to four otter species, all with decreasing population trends. All four of Southeast Asia’s otter species can coexist sympatrically and are on the International Union for Conservation of Nature’s (IUCN) Red List of Threatened Species. There are knowledge gaps in the distribution range and population sizes of these elusive species, and that information is essential for implementing effective conservation measures. Footprints can be a low-cost, non-invasive way to collect relevant data. WildTrack developed a classification model based on Footprint Identification Technology (FIT) that uses landmark measurements as input data. This model is highly accurate at distinguishing between three of the four otter species in Southeast Asia. In this study, we propose a deep learning-based approach that automates species classification by analyzing the area within minimum bounding boxes placed around footprints. The method significantly reduces processing time and eliminates the need for highly skilled operators to place landmark points on footprints.

To train the model, we used 2,562 images with 3,895 footprints, which resulted in an impressive 99% accuracy, precision, and recall on both the training and test sets. Moreover, the model's performance was tested on a new set of 431 footprints that had not been used in the training process, and only 4 of them were misclassified, demonstrating the effectiveness of the proposed approach on previously unseen data. The findings of this study confirm the viability of using a machine learning-based approach to identify otter species accurately from their footprints. This approach is reliable and low-cost, which makes it an attractive tool for otter monitoring and conservation efforts in Southeast Asia. In addition, the method has significant potential for application in community-based citizen science monitoring programs. Further research could expand the scope of the study by adding footprints of the Sumatran otter, as well as of sympatric non-otter species, to the training database. Moreover, this study suggests developing an object detection model and training new classification models that predict sex or re-identify individuals using a larger set of images of known (captive) individuals.
